
Image to Design

On the 18th of November 2025, Google dropped Nano Banana 2. As soon as I saw it, it was clear that the way people make designs was going to change.

A nano-banana-2 image generated with the help of Gamma's scaffolding

It's slow and expensive (about 16 seconds and 13 cents for a 1MP image). But cheaper alternatives like z-image-turbo and flux-klein-9B already work well, and costs will keep falling.

For anyone creating a social post, a slide deck, a poster, or any other piece of visual communication, the ability to describe what you want in plain language and get back a great-looking, faithful rendition is obviously desirable. But right now it still comes with a catch.

Image-based vs. code-based

There are two main approaches to getting AI to create and edit designs: the image-based approach (generate pixels directly) and the "code-based" approach.

When I say “code-based” I mean an LLM generating HTML+CSS, SVG, or some other structured language that positions individual elements on a canvas (like text blocks, images, shapes).
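To make "structured language that positions individual elements" concrete, here is a minimal sketch in Python. The `Rect` and `Text` classes and the `render` function are hypothetical names invented for illustration; they are not the format of any particular design tool or model. The sketch only assumes that a design can be described as a list of elements with explicit coordinates and then serialized to SVG.

```python
from dataclasses import dataclass, replace
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class Rect:
    """A filled rectangle (hypothetical element type for this sketch)."""
    x: int
    y: int
    width: int
    height: int
    fill: str

    def to_svg(self) -> str:
        return (f'<rect x="{self.x}" y="{self.y}" width="{self.width}" '
                f'height="{self.height}" fill="{self.fill}"/>')


@dataclass(frozen=True)
class Text:
    """A single line of text (hypothetical element type for this sketch)."""
    x: int
    y: int
    content: str
    font_size: int
    fill: str

    def to_svg(self) -> str:
        # Escape the text so characters like & and < stay valid XML.
        return (f'<text x="{self.x}" y="{self.y}" font-size="{self.font_size}" '
                f'fill="{self.fill}">{escape(self.content)}</text>')


def render(elements, width=1080, height=1080) -> str:
    """Serialize a list of elements into one SVG document string."""
    body = "\n  ".join(e.to_svg() for e in elements)
    return (f'<svg xmlns="http://www.w3.org/2000/svg" '
            f'width="{width}" height="{height}">\n  {body}\n</svg>')


# The kind of structure an LLM might emit: a background plus a headline.
design = [
    Rect(0, 0, 1080, 1080, "#fdf6e3"),
    Text(80, 200, "Summer Sale", 96, "#222222"),
]

# Nudge only the headline down by 40 units; the background is untouched.
edited = [design[0], replace(design[1], y=design[1].y + 40)]
svg = render(edited)
```

Because every element carries its own coordinates, moving the headline is a one-field change rather than a new prompt, and the rest of the design is guaranteed to stay identical.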

The two techniques have different strengths and weaknesses:

| | Image models | Code models |
|---|---|---|
| Visual expressiveness | Overlapping elements, illustrated flourishes, mixed-media textures | Designs feel generic/templated |
| Cleanliness | Everything is grainy and approximate | Crisp text, perfect alignment, exact colors, sharp at any zoom |
| Compatibility with other editors | Locked into image-only editing | Structured output can be converted to native elements in other design software |
| Controllability | Inpainting or full regeneration, unpredictable, can introduce unwanted changes | Targeted edits without corrupting the rest of the design |
| Baseline quality | Even mediocre generations look passably designed - colors harmonize, layout feels composed | Text can overflow off-screen, elements overlap unreadably, font/color choices can clash |

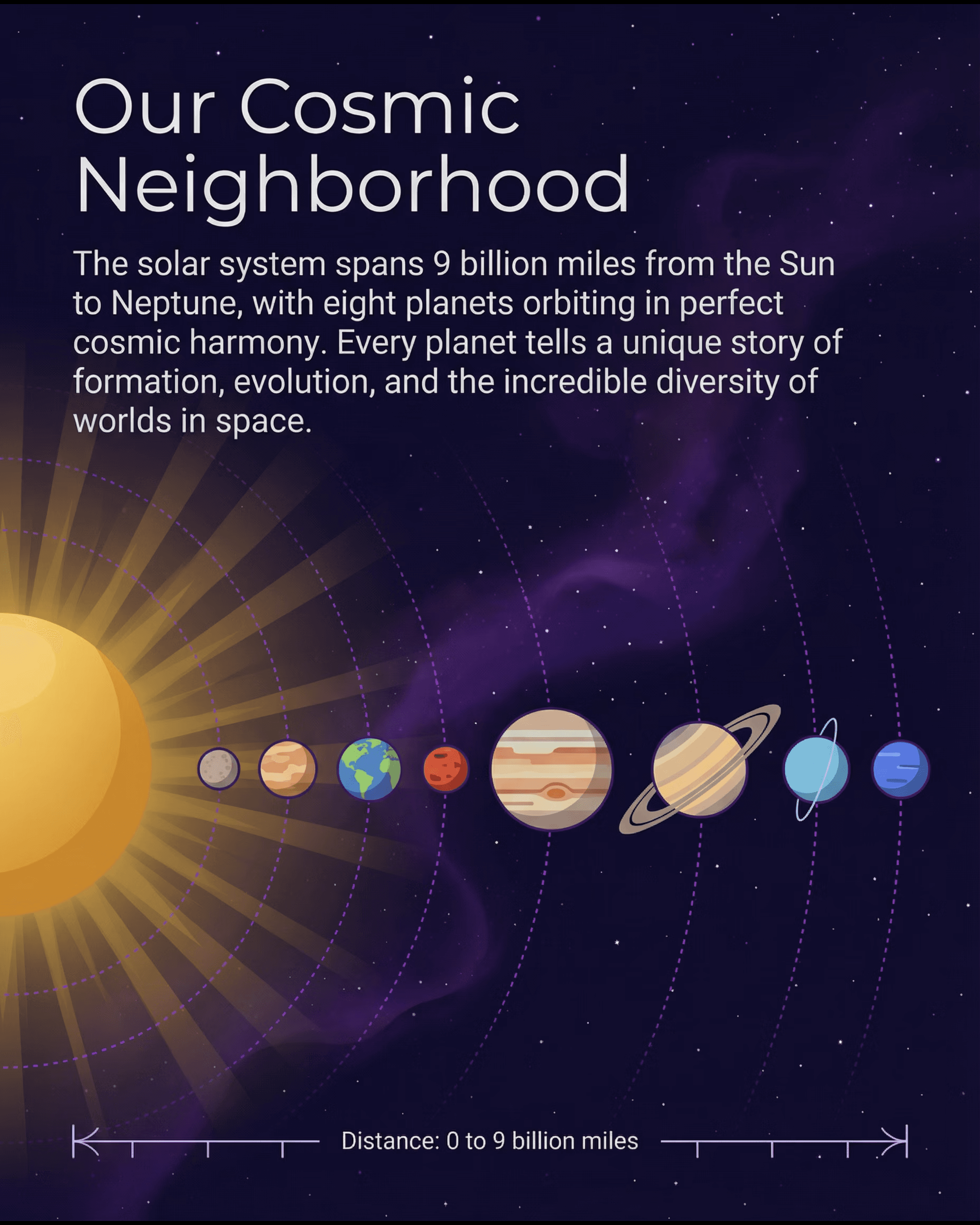

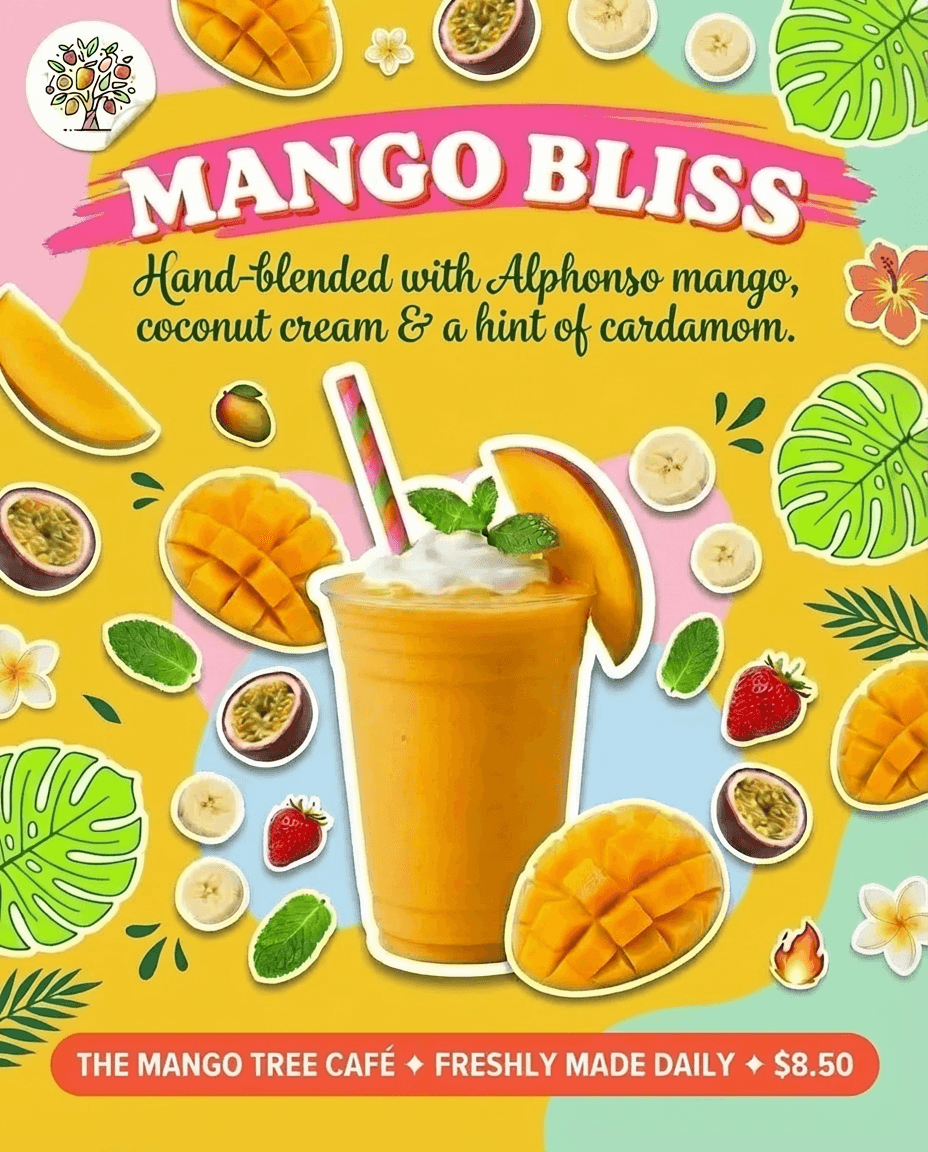

Code model

Image model

Both sloppy in their own special way.

As the models improve, these downsides will soften. But as long as both kinds of model continue to get better, the tradeoff will still exist.

Can we have both?

It would be so nice if we could somehow have our cake and eat it too.

When working with image models, trying your 5th different prompt to just shift the text a little bit downwards is maddening. But in the right context, image-based design editing feels unreal - when it hits, it hits hard.

At the same time, WYSIWYG drag-and-drop editing is still the natural interface for heaps of design tasks. Even with the advent of AI, there's a lot to love about the Canva editor.

The best possible design experience is one where we have a seamless bridge between the element domain and the pixel domain, at low latency. If we had this, the experience of creating designs could be so easy and so fun.

Imagine having a brilliant AI assistant that can create almost anything for you - and still being able to make adjustments with your traditional tools, manage your existing templates and assets, design with precision, and print or display at any size without degradation. All without everything looking like a vibe-coded Claude UI.

I believe it's possible to build the pixel <-> elements bridge that would make the dream-come-true design editing UX possible. So that's what I'm working on right now.

If you want to work on this with me, or know someone who might, please reach out on LinkedIn or at xavier.orourke@gmail.com.